Software engineer

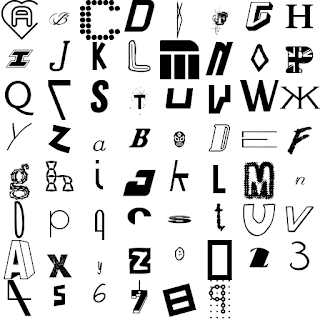

Erik Bernhardsson took a sample of 50,000 fonts, with characters as varied as shown in the compilation above, and looked for basic underlying structure with a

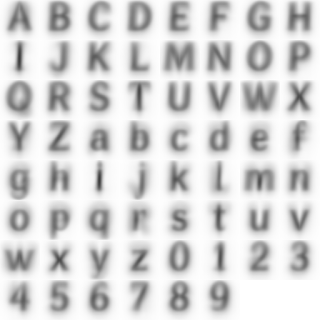

neural network. A neural network is statistically a linear combination of nonlinear functions of linear combinations of input variables. Here, the input variables are digital images of each font character expressed as vectors. Iterative adjustment, termed learning, is applied to produce a linear combination of the inputs. An output estimate of the input character is computed from the other set linear coefficients. All coefficients are chosen to minimize a measure of lack of fit. Bernhardsson then looked at the mean and median of the resulting output characters.
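To make that description concrete, here is a minimal sketch of a network with one hidden layer. Its outputs are a linear combination (`W2`, `b2`) of tanh functions of linear combinations (`W1`, `b1`) of the input pixels, and all coefficients are fitted by plain gradient descent so that the output reproduces the input, with squared error as the lack-of-fit measure. The tanh nonlinearity, the squared-error loss, the learning rate, and the four-pixel toy "glyphs" are all assumptions for illustration. The example is deliberately tiny and is not Bernhardsson's actual architecture or code.

```python
import math
import random


def forward(x, W1, b1, W2, b2):
    """Return (hidden, output): a linear combination (W2, b2) of tanh
    functions of linear combinations (W1, b1) of the inputs x."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W2, b2)]
    return h, y


def squared_error(y, x):
    """Lack-of-fit measure: sum of squared differences between output and input."""
    return sum((yi - xi) ** 2 for yi, xi in zip(y, x))


def total_loss(data, W1, b1, W2, b2):
    return sum(squared_error(forward(x, W1, b1, W2, b2)[1], x) for x in data)


def train(data, n_hidden=2, lr=0.05, epochs=2000, seed=0):
    """Fit all coefficients by per-example gradient descent on squared error."""
    rng = random.Random(seed)
    n = len(data[0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n)]
    b2 = [0.0] * n
    for _ in range(epochs):
        for x in data:
            h, y = forward(x, W1, b1, W2, b2)
            dy = [2 * (yi - xi) for yi, xi in zip(y, x)]  # d(loss)/d(output)
            # Backpropagate through W2 (before updating it) and tanh' = 1 - tanh^2.
            dh = [sum(dy[i] * W2[i][j] for i in range(n)) * (1 - h[j] ** 2)
                  for j in range(n_hidden)]
            for i in range(n):
                for j in range(n_hidden):
                    W2[i][j] -= lr * dy[i] * h[j]
                b2[i] -= lr * dy[i]
            for j in range(n_hidden):
                for k in range(n):
                    W1[j][k] -= lr * dh[j] * x[k]
                b1[j] -= lr * dh[j]
    return W1, b1, W2, b2


if __name__ == "__main__":
    # Hypothetical four-pixel "glyphs" standing in for real font images.
    glyphs = [[1, 0, 0, 1], [0, 1, 1, 0], [1, 1, 0, 0], [0, 0, 1, 1]]
    print("before:", total_loss(glyphs, *train(glyphs, epochs=0)))
    print("after: ", total_loss(glyphs, *train(glyphs)))
```

Calling `train` with `epochs=0` returns the random starting coefficients, so comparing the two losses shows how much the fitting reduces the lack of fit.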

Mean of all the output fonts.

Median of all the output fonts.
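The post does not say exactly how the mean and median were taken. A plausible reading, assumed here, is that they are computed coordinate by coordinate across equal-length vectors (for example, flattened glyph images or font vectors). The sketch below uses only Python's `statistics` module; the helper names are hypothetical.

```python
from statistics import mean, median


def coordinatewise(stat, vectors):
    """Apply a summary statistic to each coordinate across equal-length vectors."""
    return [stat(column) for column in zip(*vectors)]


def mean_vector(vectors):
    return coordinatewise(mean, vectors)


def median_vector(vectors):
    return coordinatewise(median, vectors)


if __name__ == "__main__":
    # Hypothetical 2-D vectors; the third is an outlier in its first coordinate.
    vectors = [[0.0, 1.0], [2.0, 3.0], [10.0, 5.0]]
    print(mean_vector(vectors))    # [4.0, 3.0]
    print(median_vector(vectors))  # [2.0, 3.0]
```

The coordinatewise median is less sensitive than the mean to a few extreme inputs: in the example, one outlier pulls the mean of the first coordinate up to 4.0 while the median stays at 2.0.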

Note how readable the mean and median fonts are, even though the individual input fonts are extremely varied, as the first image shows. He goes on to interpolate between fonts, apply random perturbations, and even generate new fonts by sampling from a multivariate normal distribution of the font vectors.
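These three operations can be sketched on plain lists of floats standing in for font vectors: linear interpolation between two vectors, perturbation with independent Gaussian noise, and sampling new vectors from a multivariate normal whose mean and covariance are estimated from the existing ones. Samples are drawn as mu + L z, where L is the Cholesky factor of the covariance and z is standard normal. Linear interpolation, isotropic noise, and the small `jitter` term are assumptions, not details confirmed by the post, and turning a sampled vector back into glyphs would need the trained network, which is omitted here.

```python
import math
import random


def interpolate(a, b, t):
    """Linear interpolation: returns a at t=0 and b at t=1."""
    return [(1 - t) * ai + t * bi for ai, bi in zip(a, b)]


def perturb(v, scale, rng):
    """Add independent Gaussian noise with standard deviation `scale` to each coordinate."""
    return [vi + rng.gauss(0.0, scale) for vi in v]


def fit_gaussian(vectors):
    """Sample mean and (n - 1)-normalized sample covariance of the vectors."""
    n, d = len(vectors), len(vectors[0])
    mu = [sum(v[i] for v in vectors) / n for i in range(d)]
    cov = [[sum((v[i] - mu[i]) * (v[j] - mu[j]) for v in vectors) / (n - 1)
            for j in range(d)] for i in range(d)]
    return mu, cov


def cholesky(A, jitter=1e-6):
    """Lower-triangular L with L L^T = A + jitter * I, for symmetric PSD A."""
    d = len(A)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                diag = A[i][i] + jitter - s
                if diag <= 0:
                    raise ValueError("matrix is not positive definite")
                L[i][i] = math.sqrt(diag)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def sample_mvn(mu, cov, n, rng):
    """Draw n samples mu + L z, with z ~ N(0, I) and L the Cholesky factor of cov."""
    L = cholesky(cov)
    samples = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in mu]
        samples.append([m + sum(L[i][k] * z[k] for k in range(i + 1))
                        for i, m in enumerate(mu)])
    return samples


if __name__ == "__main__":
    rng = random.Random(0)
    fonts = [[0.0, 1.0], [1.0, 2.5], [2.0, 2.0], [3.0, 4.0]]  # hypothetical font vectors
    print(interpolate(fonts[0], fonts[3], 0.5))  # [1.5, 2.5]
    print(perturb(fonts[0], 0.1, rng))
    mu, cov = fit_gaussian(fonts)
    print(sample_mvn(mu, cov, 3, rng))
```

The jitter keeps the Cholesky factorization usable when the sample covariance is singular or nearly so, which happens when there are fewer vectors than dimensions.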
